The European Union's AI Act: What You Need to Know

As public awareness of the widespread use of artificial intelligence (AI) and machine learning technologies has skyrocketed in recent years, so, too, has demand for ethical safeguards and transparency regarding how AI-based systems are used. With this objective in mind, the European Union announced in December 2023 that it had reached a provisional agreement on the basic content of the forthcoming Artificial Intelligence Act (AI Act or Act). The proposed legislation, expected to take effect between May and July 2024, has since been released, giving stakeholders an early look at the structure of the AI Act.

The AI Act aims to establish a "comprehensive legal framework on AI worldwide" for "foster[ing] trustworthy AI in Europe and beyond, by ensuring that AI systems respect fundamental rights, safety, and ethical principles and by addressing risks of very powerful and impactful AI models." See the European Commission report on the AI Act. The newly created EU AI Office will oversee implementation and enforcement of the AI Act. Penalties for noncompliance can be hefty, ranging from €7.5 million or 1.5 percent of global revenue up to €35 million or 7 percent of global revenue, depending on the infringement and the size of the company. See December 2023 EU Parliament press release. It is, therefore, critical that providers, developers and implementers of AI models or AI-based systems understand the forthcoming AI Act and its implications for their business.

AI Act Basics

First and foremost, the proposed AI Act applies to providers and developers of AI systems that are marketed or used within the EU (including free-to-use AI technology), regardless of whether those providers or developers are established in the EU or another country. AI Act Committee Draft, Title I, Art. 2(1) (Feb. 2, 2024); see also EU AI Act Explorer (Article 2). This means that, as with the EU's General Data Protection Regulation (GDPR), U.S.-based companies that market or provide AI-based technology within the EU may be subject to the Act's penalties for noncompliance. The Act does not separately address AI systems that process EU citizens' personal information; however, it does state that existing EU law on the protection of personal data, privacy and confidentiality applies to the collection and use of any such information for AI-based technologies. AI Act Committee Draft at Art. 2(5a).

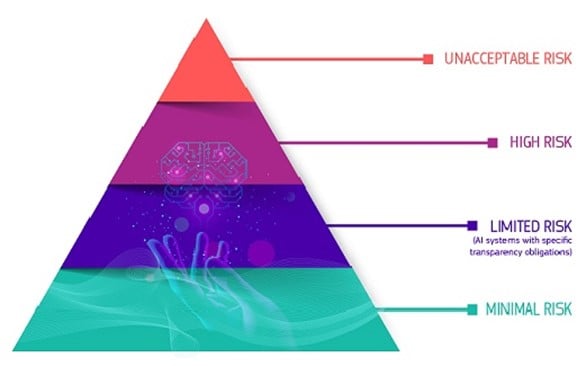

The AI Act adopts a risk-based approach for categorizing AI systems into four tiers. These tiers generally correspond to 1) the sensitivity of the data involved and 2) the particular AI use case or application. See the European Commission report on the AI Act.

Source: European Commission report on the AI Act

AI practices that pose "unacceptable risk" are expressly prohibited under the Act. These prohibited practices include marketing, providing or using AI-based systems that:

- use manipulative, deceptive and/or subliminal techniques to influence a person to make a decision that they otherwise would not have made in a way that causes or may cause that person or other persons significant harm

- exploit vulnerabilities of person(s) due to their age, disability or specific social/economic situation in order to influence the behavior of that person in a way that causes or may cause that person or other persons significant harm

- use biometric data to categorize individuals based on their race, political opinions, trade union membership, religious or philosophical beliefs, sex life or sexual orientation

- create or expand facial recognition databases through untargeted scraping of facial images from the internet or closed-circuit television (CCTV) footage

AI Act Committee Draft, Title II, Art. 5(1)(a)-(ba), (db); see also EU AI Act Explorer (Article 5). View a complete list of prohibited AI practices. AI practices that pose an unacceptable risk are subject to the greatest penalties and may expose companies to fines of up to €35 million or 7 percent of a company's global annual revenue, whichever is greater.
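To make the "whichever is greater" rule concrete, the following is a minimal Python sketch of the top-tier ceiling described above. The function name and the sample revenue figures are hypothetical and used only for illustration; the sketch covers only the €35 million / 7 percent tier and does not model any adjustment based on the size of the company or the nature of the infringement.

```python
def max_prohibited_practice_fine(global_annual_revenue_eur: int) -> int:
    """Ceiling, in whole euros, of the fine for a prohibited AI practice.

    Illustrative only: applies the "EUR 35 million or 7 percent of annual
    revenue, whichever is greater" rule described in the text.
    """
    fixed_amount_eur = 35_000_000
    # 7 percent of revenue, using integer arithmetic to avoid float rounding
    revenue_based_eur = global_annual_revenue_eur * 7 // 100
    return max(fixed_amount_eur, revenue_based_eur)


if __name__ == "__main__":
    # Hypothetical revenue figures, chosen only to show both branches
    for revenue in (100_000_000, 500_000_000, 2_000_000_000):
        ceiling = max_prohibited_practice_fine(revenue)
        print(f"revenue EUR {revenue:,} -> maximum fine EUR {ceiling:,}")
```

Under this rule, the fixed €35 million figure controls for companies with annual revenue below €500 million; above that point, the 7 percent figure becomes the larger of the two.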

The "high risk" systems category is much more expansive than the "unacceptable risk" category and likely encompasses many AI applications already in use today. For example, high-risk applications of AI technology may include biometric identification systems, educational/vocational training or evaluation systems, employment evaluation or recruitment systems, and financial evaluation or insurance-related systems. See AI Act Committee Draft, Art. 6, Annex III; see also EU AI Act Explorer (Article 6).

The precise boundaries for high-risk AI technologies, however, are not yet certain. The Act clarifies that systems that "do not pose a significant risk of harm[] to the health, safety or fundamental rights of natural persons" generally will not be considered high-risk, although it leaves the European Commission and AI Office 18 months to develop "practical" guidance that implementers can follow to ensure compliance with this requirement. AI Act Committee Draft, Art. 6(2a), (2c). Companies may be able to avoid certain restrictions applicable to high-risk systems by 1) conducting an appropriate assessment before that system or service is put on the market and 2) further providing that assessment to national authorities upon request. Id. at Art. 6(2b).

At a minimum, developers and implementers whose technology falls within the high-risk category should be prepared to comply with the following requirements of the AI Act:

- register with the centralized EU database

- have a compliant quality management system in place

- maintain adequate documentation and logs

- undergo relevant conformity assessments

- comply with restrictions on the use of high-risk AI

- continue to ensure regulatory compliance and be prepared to demonstrate such compliance upon request

See AI Act Committee Draft, Art. 16 (Obligations of Providers of High-Risk AI Systems), Art. 60 (EU Database for High-Risk AI Systems); see also EU AI Act Explorer (Article 16), (Article 60).
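For teams that track compliance work internally, the requirements listed above could be kept as a simple checklist. The following Python sketch is one possible way to do so; the class name, method names and system name are hypothetical, the obligation strings are taken from the list above (the last one abbreviated), and nothing here reflects an official format or tool.

```python
from dataclasses import dataclass, field

# Obligations as summarized in the list above (wording abbreviated in places)
HIGH_RISK_OBLIGATIONS = (
    "register with the centralized EU database",
    "have a compliant quality management system in place",
    "maintain adequate documentation and logs",
    "undergo relevant conformity assessments",
    "comply with restrictions on the use of high-risk AI",
    "be prepared to demonstrate compliance upon request",
)


@dataclass
class HighRiskChecklist:
    """Hypothetical tracker for one high-risk AI system."""
    system_name: str
    completed: set = field(default_factory=set)

    def mark_done(self, obligation: str) -> None:
        # Reject anything that is not on the known list, to catch typos
        if obligation not in HIGH_RISK_OBLIGATIONS:
            raise ValueError(f"unknown obligation: {obligation!r}")
        self.completed.add(obligation)

    def outstanding(self) -> list:
        # Preserve the original ordering of the obligations
        return [o for o in HIGH_RISK_OBLIGATIONS if o not in self.completed]


if __name__ == "__main__":
    checklist = HighRiskChecklist("resume-screening-tool")  # hypothetical system
    checklist.mark_done("maintain adequate documentation and logs")
    for item in checklist.outstanding():
        print("TODO:", item)
```

A checklist of this kind is only a bookkeeping aid; whether a given obligation has actually been satisfied remains a legal determination.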

The AI Act also imposes transparency obligations on the use of AI and sets forth certain restrictions on the use of general-purpose AI models. For example, the Act requires that AI systems intended to directly interact with humans be clearly marked as such, unless this is obvious under the circumstances. See AI Act Committee Draft, Art. 52. In addition, general-purpose AI models with "high impact capabilities" (defined as general-purpose AI models for which the cumulative amount of compute used during training, measured in floating point operations (FLOPs), is greater than 10^25) may be subject to additional restrictions. See AI Act Committee Draft, Art. 52a(2); see also EU AI Act Explorer (Article 52a). Among other requirements, providers of such models must maintain technical documentation of the model and training results, employ policies to comply with EU copyright laws and provide a detailed summary about the content used for training to the AI Office. Id. at Art. 52c(1); see also EU AI Act Explorer (Article 52c).
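The compute threshold in Art. 52a(2) is a cumulative count of training operations (10^25 floating point operations), not a rate of operations per second. The Python sketch below illustrates the difference under stated assumptions: the function names, device count, per-device throughput and utilization figure are all hypothetical, and the Act does not prescribe this or any other estimation method.

```python
# Threshold from Art. 52a(2): a cumulative total of operations, not a rate
HIGH_IMPACT_THRESHOLD_FLOPS = 10**25


def total_training_flops(devices: int,
                         flops_per_second_per_device: float,
                         seconds: float,
                         utilization: float = 1.0) -> float:
    """Rough cumulative operation count: hardware rate multiplied by time.

    Illustrative estimate only; all inputs are assumptions of the caller.
    """
    return devices * flops_per_second_per_device * seconds * utilization


def exceeds_high_impact_threshold(cumulative_flops: float) -> bool:
    """True only if the total is strictly greater than the threshold."""
    return cumulative_flops > HIGH_IMPACT_THRESHOLD_FLOPS


if __name__ == "__main__":
    seconds_per_day = 24 * 60 * 60
    # Hypothetical run: 10,000 accelerators at 1e15 operations per second
    # each, 40 percent utilization, for 10 days and for 30 days
    for days in (10, 30):
        total = total_training_flops(10_000, 1e15, days * seconds_per_day, 0.4)
        print(f"{days} days: {total:.3e} operations, "
              f"over threshold: {exceeds_high_impact_threshold(total)}")
```

In this hypothetical, the 10-day run totals roughly 3.5 × 10^24 operations and stays under the threshold, while the 30-day run totals roughly 1.04 × 10^25 and exceeds it, even though the hardware rate is identical in both cases.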

Conclusion

This post highlights some of the biggest changes in the EU's forthcoming AI Act but is by no means a comprehensive list of all of the proposed changes (some of which remain to be seen). Regardless, whether applied to a provider, developer or implementer of AI technology, the AI Act has the potential to significantly change the way companies operate within the EU and globally. Leaders should take time to understand how these regulations may affect them and what strategies they can deploy to ensure they can operate in compliance with their obligations once the new law takes effect.